- Passive Transformation

- Connected and Unconnected Transformation

- Stored procedures are stored and run within the database.

Use of Stored Procedure in mapping:

- Check the status of a target database before loading data into it.

- Determine if enough space exists in a database.

- Perform a specialized calculation.

- Drop and recreate indexes. Mostly used for this in projects.

Input/output parameters: Parameters we give as input and the parameters returned from Stored Procedure.

Return values: Value returned by Stored Procedure if any.

Status codes: Status codes provide error handling for the IS during a workflow. The stored procedure issues a status code that notifies whether or not the stored procedure completed successfully. We cannot see this value. The IS uses it to determine whether to continue running the session or stop. Specifying when the Stored Procedure Runs

Normal: The stored procedure runs where the transformation exists in the mapping on a row-by-row basis. We pass some input to procedure and it returns some calculated values. Connected stored procedures run only in normal mode.

Pre-load of the Source: Before the session retrieves data from the source, the stored procedure runs. This is useful for verifying the existence of tables or performing joins of data in a temporary table.

Post-load of the Source: After the session retrieves data from the source, the stored procedure runs. This is useful for removing temporary tables.

Pre-load of the Target: Before the session sends data to the target, the stored procedure runs. This is useful for dropping indexes or disabling constraints.

Post-load of the Target: After the session sends data to the target, the stored procedure runs. This is useful for re-creating indexes on the database.

Using a Stored Procedure in a Mapping :

- Create the stored procedure in the database.

- Import or create the Stored Procedure transformation.

- Determine whether to use the transformation as connected or unconnected.

- If connected, map the appropriate input and output ports.

- If unconnected, either configure the stored procedure to run pre- or post-session, or configure it to run from an expression in another transformation.

- Configure the session.

Connect to Source database and create the stored procedures given below:

CREATE OR REPLACE procedure sp_agg (in_deptno in number, max_sal out number,

min_sal out number, avg_sal out number, sum_sal out number)

As

Begin

select max(Sal),min(sal),avg(sal),sum(sal) into max_sal,min_sal,avg_sal,sum_sal

from emp where deptno=in_deptno group by deptno;

End;

/

CREATE OR REPLACE procedure sp_unconn_1_value(in_deptno in number, max_sal out number)

As

Begin

Select max(Sal) into max_sal from EMP where deptno=in_deptno;

End;

/

1. Connected Stored Procedure T/F

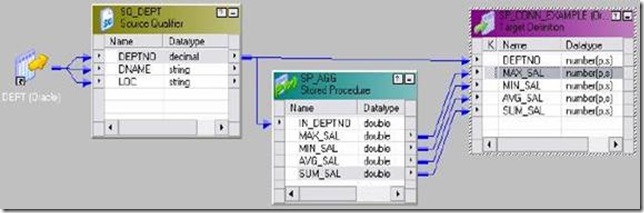

Example: To give input as DEPTNO from DEPT table and find the MAX, MIN, AVG and SUM of SAL from EMP table.

- DEPT will be source table. Create a target table SP_CONN_EXAMPLE with fields DEPTNO, MAX_SAL, MIN_SAL, AVG_SAL & SUM_SAL.

- Write Stored Procedure in Database first and Create shortcuts as needed.

1. Open folder where we want to create the mapping.

2. Click Tools -> Mapping Designer.

3. Click Mapping-> Create-> Give name. Ex: m_SP_CONN_EXAMPLE

4. Drag DEPT and Target table.

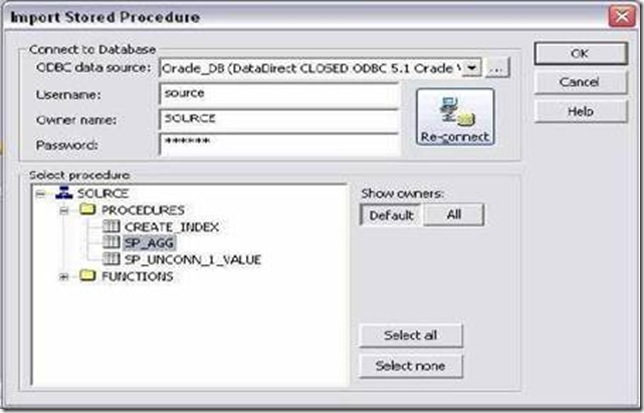

5. Transformation -> Import Stored Procedure -> Give Database Connection -> Connect -> Select the procedure sp_agg from the list.

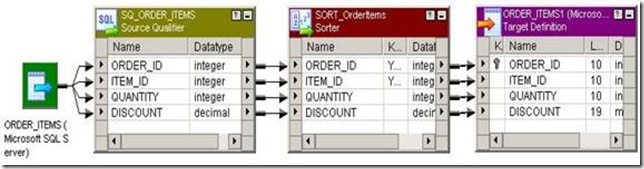

6. Drag DEPTNO from SQ_DEPT to the stored procedure input port and also to DEPTNO port of target.

7. Connect the ports from procedure to target as shown below:

8. Mapping -> Validate

9. Repository -> Save

- Create Session and then workflow.

- Give connection information for all tables.

- Give connection information for Stored Procedure also.

- Run workflow and see the result in table.

2. Unconnected Stored Procedure T/F :

An unconnected Stored Procedure transformation is not directly connected to the flow of data through the mapping. Instead, the stored procedure runs either:

- From an expression: Called from an expression transformation.

- Pre- or post-session: Runs before or after a session.

- Assign the output value to a local variable.

- Assign the output value to the system variable PROC_RESULT. (See Later)

- DEPT will be source table.

- Create a target table with fields DEPTNO and MAX_SAL of decimal data type.

- Write Stored Procedure in Database first and Create shortcuts as needed.

1. Open folder where we want to create the mapping.

2. Click Tools -> Mapping Designer.

3. Click Mapping-> Create-> Give name. Ex: m_sp_unconn_1_value

4. Drag DEPT and Target table.

5. Transformation -> Import Stored Procedure -> Give Database Connection -> Connect -> Select the procedure sp_unconn_1_value from the list. Click OK.

6. Stored Procedure has been imported.

7. T/F -> Create Expression T/F. Pass DEPTNO from SQ_DEPT to Expression T/F.

8. Edit expression and create an output port OUT_MAX_SAL of decimal data type.

9. Open Expression editor and call the stored procedure as below:Click OK and connect the port from expression to target as in mapping below:

10. Mapping -> Validate

11. Repository Save.

- Create Session and then workflow.

- Give connection information for all tables.

- Give connection information for Stored Procedure also.

- Run workflow and see the result in table.

- If the stored procedure returns a single output parameter or a return value, we the reserved variable PROC_RESULT as the output variable.

:SP.SP_UNCONN_1_VALUE(DEPTNO,PROC_RESULT)

- If the stored procedure returns multiple output parameters, you must create variables for each output parameter.

as output then:

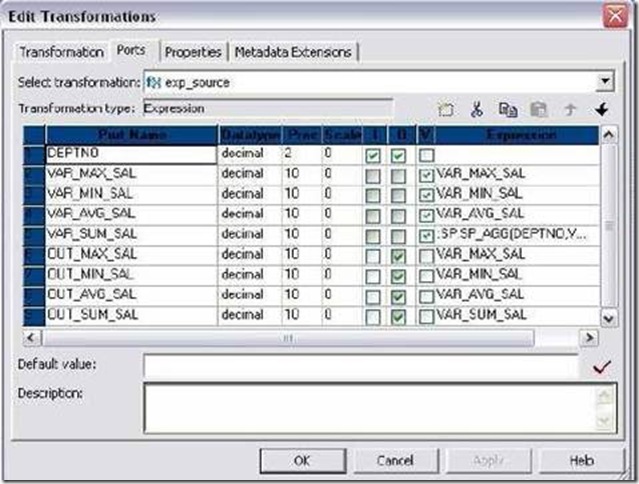

1. Create four variable ports in expression VAR_MAX_SAL,VAR_MIN_SAL, VAR_AVG_SAL and iVAR_SUM_SAL.

2. Create four output ports in expression OUT_MAX_SAL, OUT_MIN_SAL, OUT_AVG_SAL and OUT_SUM_SAL.

3. Call the procedure in last variable port says VAR_SUM_SAL.

:SP.SP_AGG (DEPTNO, VAR_MAX_SAL,VAR_MIN_SAL, VAR_AVG_SAL, PROC_RESULT)

Example 2:

DEPTNO as Input and MAX_SAL, MIN_SAL, AVG_SAL and SUM_SAL as O/P Stored Procedure to drop index in Pre Load of Target Stored Procedure to create index in Post Load of Target

- DEPT will be source table. Create a target table SP_UNCONN_EXAMPLE with fields DEPTNO, MAX_SAL, MIN_SAL, AVG_SAL & SUM_SAL.

- Write Stored Procedure in Database first and Create shortcuts as needed. Stored procedures are given below to drop and create index on target.Make sure to create target table first. Stored Procedures to be created in next example in Target Database:

Create or replace procedure CREATE_INDEX

As

Begin

Execute immediate 'create index unconn_dept on SP_UNCONN_EXAMPLE(DEPTNO)';

End;

/

Create or replace procedure DROP_INDEX

As

Begin

Execute immediate 'drop index unconn_dept';

End;

/

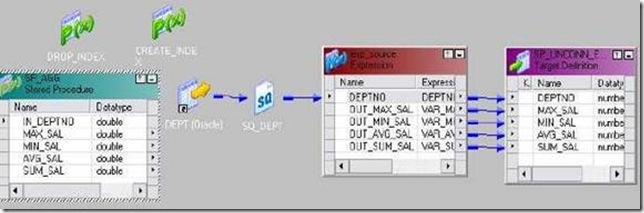

Creating Mapping:

1. Open folder where we want to create the mapping.

2. Click Tools -> Mapping Designer.

3. Click Mapping-> Create-> Give name. Ex: m_sp_unconn_1_value

4. Drag DEPT and Target table.

5. Transformation -> Import Stored Procedure -> Give Database Connection -> Connect -> Select the procedure sp_agg from the list. Click OK.

6. Stored Procedure has been imported.

7. T/F -> Create Expression T/F. Pass DEPTNO from SQ_DEPT to Expression T/F.

8. Edit Expression and create 4 variable ports and 4 output ports as shown below:

9. Call the procedure in last variable port VAR_SUM_SAL.

10. :SP.SP_AGG (DEPTNO, VAR_MAX_SAL, VAR_MIN_SAL, VAR_AVG_SAL, PROC_RESULT)

11. Click Apply and Ok.

12. Connect to target table as needed.

13. Transformation -> Import Stored Procedure -> Give Database Connection for target -> Connect -> Select the procedure CREATE_INDEX and DROP_INDEX from the list. Click OK.

14. Edit DROP_INDEX -> Properties Tab -> Select Target Pre Load as Stored Procedure Type and in call text write drop_index. Click Apply -> Ok.

15. Edit CREATE_INDEX -> Properties Tab -> Select Target Post Load as Stored Procedure Type and in call text write create_index. Click Apply -> Ok.

16. Mapping -> Validate

17. Repository -> Save

- Create Session and then workflow.

- Give connection information for all tables.

- Give connection information for Stored Procedures also.

- Also make sure that you execute the procedure CREATE_INDEX on database before using them in mapping. This is because, if there is no INDEX on target table, DROP_INDEX will fail and Session will also fail.

- Run workflow and see the result in table.

![clip_image002[5] clip_image002[5]](http://lh4.ggpht.com/_MbhSjEtmzI8/Ta8k6Vu7C7I/AAAAAAAAAU0/K_LB4gUcA7U/clip_image002%5B5%5D_thumb%5B3%5D.jpg?imgmax=800)